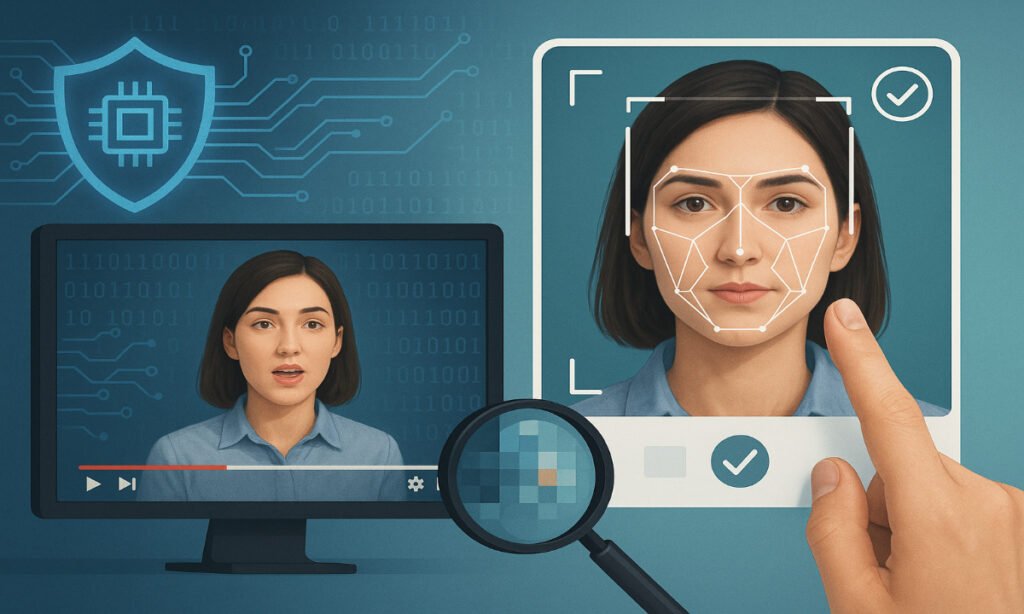

As AI grows more capable, deepfakes are advancing rapidly. In 2025, AI-generated videos, voice clones, and images can be convincing enough that even experts struggle to tell real from fake. These fabrications feed misinformation, identity theft, political manipulation, and financial fraud. In response, a new breed of deepfake defense technology is emerging: tools that can authenticate online content, verify sources, and uncover manipulation within seconds.

This is a turning point for digital trust. Tech companies, governments, cybersecurity firms, and social platforms are introducing sophisticated detection tools to combat the increasing threat of AI-generated deception. In this article, we’ll look at how deepfake defense technology works, why it’s critical in 2025, and what new technology is changing the future of online safety.

What Is Deepfake Defense Tech?

Deepfake defense technology is a collective term for the specialized software, AI models, and verification mechanisms used to identify synthetic media. These tools scan content (videos, audio, images, and text) for manipulation and for signs of AI generation. Unlike traditional fact-checking, which happens after the fact, they are designed to verify content in real time.

Deepfake defense tech usually consists of:

- AI deepfake detectors

- Digital watermarking and media signatures

- Blockchain-based authentication

- Biometric integrity tools

- Source verification systems

- Automated misinformation filters

Together, these layers form a multi-tiered digital shield that helps users and platforms tell authentic content from fabricated content.

Why Deepfake Defense Matters in 2025

Deepfakes have evolved beyond benign entertainment and viral content. They are now used as weapons for financial fraud, political interference, revenge, ethics violations, corporate deception, and targeted misinformation. As generative AI models become more powerful, the need to defend digital reality becomes more urgent.

Here’s why deepfake defense tech is critical now:

1. Protection from AI Scams

Voice clones are now accurate enough to impersonate family members or CEOs, which makes for very effective scams. Defense tools can help confirm a caller's authenticity before trust is misplaced.

2. Protecting Elections and the Public Trust

Election cycles are prime targets for deepfake-driven misinformation. Governments, media organizations, and fact-checkers are adopting defense technology to stop disinformation as close to its source as possible.

3. Preventing Identity Theft

AI-generated faces and fabricated photos can deceive facial recognition systems when security protocols are weak. Advanced detection helps block unauthorized access.

4. Preventing Corporate Fraud

Fake emails, realistic voice clones, and fake video calls are the corporate equivalent of con artists. Deepfake defense tech helps companies detect and prevent impersonation attacks.

5. Supporting Social Media Safety

Platforms apply these tools to scan billions of uploads for suspicious content and curb misinformation generated using AI.

How Deepfake Defense Tech Works

Deepfakes use AI to produce fabricated content, while deepfake defense technology uses similarly powerful AI to identify and analyze it. These tools scan several layers of a media file for anomalies that humans often cannot detect.

1. AI Pattern Analysis

Sophisticated algorithms check for unnatural micro-expressions, lighting inconsistencies, atypical pixel patterns, anomalous audio waveforms, and face-morphing artifacts.

2. Audio Frequency Validation

Irregular frequency gaps, robotic harmonics, and unnatural breathing sounds are common in deepfake audio. Detection algorithms can pick up these abnormalities within seconds.

3. Metadata Inspection

Most digital files carry metadata. Deepfake defense systems examine timestamps, device information, compression logs, and signature mismatches.
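
A minimal sketch of what such an inspection might look like, assuming the metadata has already been parsed into a dictionary. The field names (`created`, `modified`, `device`, `sha256`) and the function `inspect_metadata` are hypothetical, and a plain SHA-256 comparison stands in here for a real cryptographic signature check.

```python
import hashlib
from datetime import datetime


def inspect_metadata(meta: dict, content: bytes) -> list:
    """Return a list of warnings; an empty list means no check failed."""
    warnings = []

    # Timestamps: a file cannot be modified before it was created.
    created, modified = meta.get("created"), meta.get("modified")
    if not created or not modified:
        warnings.append("missing timestamp")
    elif datetime.fromisoformat(modified) < datetime.fromisoformat(created):
        warnings.append("modified before created")

    # Device information: genuine camera files usually record a device.
    if not meta.get("device"):
        warnings.append("missing device information")

    # Compare the hash recorded in the metadata with the hash of the actual bytes.
    if meta.get("sha256") != hashlib.sha256(content).hexdigest():
        warnings.append("signature mismatch")

    return warnings
```

A warning here is only a reason to look closer, not proof of manipulation: metadata can be stripped by ordinary re-encoding, and it can also be forged.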

4. Watermark Detection

A growing number of platforms now add invisible digital watermarks to genuine content. Defense tech checks for these marks and verifies them before treating the content as authentic.
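
To illustrate the idea of an invisible watermark, here is a toy sketch that hides a bit pattern in the least significant bit of each pixel value. This is an assumed, simplified scheme chosen for clarity, not how any particular platform does it; production watermarks are built to survive compression and editing, which this one would not. The function names are hypothetical.

```python
def embed_watermark(pixels: list, bits: list) -> list:
    """Write each watermark bit into the lowest bit of one pixel value."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the image")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract_watermark(pixels: list, length: int) -> list:
    """Read back the lowest bit of the first `length` pixel values."""
    return [p & 1 for p in pixels[:length]]


def has_watermark(pixels: list, expected_bits: list) -> bool:
    """Check whether the expected bit pattern is present."""
    return extract_watermark(pixels, len(expected_bits)) == expected_bits


pixels = [200, 13, 77, 54, 255, 0, 128, 91]   # grayscale values, 0-255
mark = [1, 0, 1, 1, 0, 0, 1, 0]               # hypothetical watermark pattern
marked = embed_watermark(pixels, mark)
```

Each pixel changes by at most one brightness level, which is why the mark is invisible to the eye while still being machine-readable.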

5. Blockchain Source Tracking

With blockchain, content can be stamped at the moment of creation. Every subsequent edit or upload is recorded, forming a transparent chain of custody that helps verify authenticity.
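
The chain-of-custody idea can be sketched with a simple hash chain, in which each record stores the hash of the record before it. This is a single-machine illustration under assumed record fields (`prev_hash`, `action`, `content_hash`), not a distributed blockchain; the names `make_record` and `verify_chain` are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    # Hash a canonical JSON encoding so the same fields always give the same digest.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def make_record(prev_hash: str, action: str, content: bytes) -> dict:
    """Build one custody record linked to the previous record's hash."""
    body = {
        "prev_hash": prev_hash,
        "action": action,
        "content_hash": hashlib.sha256(content).hexdigest(),
    }
    return {**body, "record_hash": _digest(body)}


def verify_chain(records: list) -> bool:
    """Check that every record is intact and points at the one before it."""
    prev = GENESIS
    for rec in records:
        body = {k: rec[k] for k in ("prev_hash", "action", "content_hash")}
        if rec["prev_hash"] != prev or _digest(body) != rec["record_hash"]:
            return False
        prev = rec["record_hash"]
    return True


first = make_record(GENESIS, "captured", b"original video bytes")
second = make_record(first["record_hash"], "cropped", b"cropped video bytes")
chain = [first, second]
```

Because each record's hash covers the previous record's hash, altering any earlier entry invalidates everything after it, which is what makes the history tamper-evident.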

6. Biometric Integrity Checks

These checks measure several attributes, including natural eye movement, pulse detected through subtle changes in skin color, and the pace of natural speech, all of which are very difficult to fake.
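
As a rough sketch of the pulse check, assume a face tracker has already produced one average skin-color value per video frame. A live face should show a small periodic variation at a plausible heart rate. The function names, the 0.7 to 4 Hz search band, and the thresholds below are illustrative assumptions, and a plain discrete Fourier transform is used to keep the example dependency-free.

```python
import cmath
import math


def pulse_estimate(samples, fps, low_hz=0.7, high_hz=4.0):
    """Return (frequency_hz, share_of_power) for the strongest in-band frequency."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the constant brightness level
    total_power = 0.0
    best_freq, best_power = 0.0, 0.0
    for k in range(1, n // 2):
        # Naive DFT coefficient for frequency bin k.
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        power = abs(coeff) ** 2
        total_power += power
        freq = k * fps / n
        if low_hz <= freq <= high_hz and power > best_power:
            best_freq, best_power = freq, power
    share = best_power / total_power if total_power > 0 else 0.0
    return best_freq, share


def looks_like_live_pulse(samples, fps, min_share=0.5):
    """True if one heart-rate-like frequency dominates the signal."""
    freq, share = pulse_estimate(samples, fps)
    return share > min_share and 40 <= freq * 60 <= 200  # beats per minute


fps = 30
# Synthetic 10-second signal with a 1.2 Hz (72 bpm) ripple, standing in for real data.
live = [120 + 0.5 * math.sin(2 * math.pi * 1.2 * t / fps) for t in range(300)]
flat = [120.0] * 300  # no variation at all
```

Real footage is far noisier than this synthetic signal, so practical systems add filtering and motion compensation before drawing any conclusion.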

Latest Innovations in Deepfake Defense Tech (2025)

1. Real-Time Browser Verification Tools

Extensions and built-in browser features now analyze videos on the spot and warn users when they detect deepfake signals. This puts verification within reach of everyday users.

2. Smartphone Camera Authenticity Locks

Some next-generation smartphones include anti-deepfake camera signatures that mark genuine videos with encrypted watermarks.

3. AI-Powered Social Media Filters

Platforms run deepfake detection at upload time, with the aim of stopping synthetic videos before they go live.

4. Enterprise Deepfake Validation Suites

Companies employ enterprise-level platforms to authenticate emails, calls, videos and remote meetings, and prevent fraud.

5. Government-Backed Reality Verification Systems

Some public institutions have adopted advanced forensic tools to check political content, viral posts, and suspicious videos flagged by the general public.

Benefits of Deepfake Defense Tech

- Boosts trust in online communication

- Protects users against financial fraud

- Stops impersonation and identity theft

- Bolsters national security and political transparency

- Helps social media platforms reduce misinformation

- Protects businesses against fraud

- Confirms media authenticity for journalism

- Adds protection against AI-enabled harm online

The benefits of deepfake defense technology go far beyond detection: it helps restore a sense of safety in an increasingly synthetic world.

Challenges Ahead

Despite the accelerating pace of innovation, several problems persist:

- Deepfakes evolve faster than detection mechanisms

- Advanced fakes can slip past basic detection

- Privacy concerns over use of biometric analysis

- No global regulation

- Imperfect AI models can produce false positives

- Overreliance on automated systems

As AI generation gets more sophisticated, detection tools will have to evolve in an ongoing race to stay ahead.
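
The false-positive problem is easy to underestimate when fakes are rare. The sketch below works through the arithmetic with made-up numbers (1% of uploads are fake, the detector catches 99% of them and wrongly flags 1% of genuine uploads); none of these figures come from a real system, and the function name is hypothetical.

```python
def flag_precision(prevalence: float, detection_rate: float,
                   false_positive_rate: float) -> float:
    """Fraction of flagged items that are actually fake (Bayes' rule)."""
    true_flags = prevalence * detection_rate
    false_flags = (1 - prevalence) * false_positive_rate
    return true_flags / (true_flags + false_flags)


# Hypothetical figures, chosen only to illustrate the effect.
precision = flag_precision(prevalence=0.01, detection_rate=0.99,
                           false_positive_rate=0.01)
print(f"{precision:.0%} of flagged uploads are actually fake")
# prints: 50% of flagged uploads are actually fake
```

Under these assumptions, half of everything the detector flags is genuine, which is one reason human review still matters alongside automated systems.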

FAQs

1. What is deepfake defense tech?

It is a set of tools and AI-based systems that detect and flag online content that has been manipulated or generated by AI.

2. How do deepfake detectors work?

They process pixels, audio, metadata and biometric signals to detect anomalies.

3. Can deepfake defense tech stop fraud?

It can help. It authenticates voices, videos, and text to prevent impersonation and fraud attacks.

4. Are social platforms implementing deepfake detection?

Most major platforms have AI-based detection in place and are removing synthetic media.

5. Is it possible to eradicate deepfakes?

Not entirely, but empowering people with solid detection tools will mitigate harm and make the web safer.

Conclusion

The emergence of deepfakes has transformed the digital security landscape, but deepfake defense tech is emerging as an equally strong counter. Using AI forensics, watermarking, biometric analysis, blockchain authentication, and real-time verification, this technology helps people tell truth from fabrication.

As deepfakes become more advanced, so too must the countermeasures. Yet one thing is certain: we must all now verify digital reality. Deepfake defense tech isn’t just a product feature; it’s the foundation of trust in the digital world of 2025 and beyond.